Vector Databases: What They Are and How They Power AI

If you’re diving into AI and LLMs, you’ve probably heard about vector databases. But what exactly makes a vector database different from your everyday SQL or NoSQL solution? And how do they fit into the AI/ML ecosystem?

In this post, we’ll explore the application of vector databases, particularly in the context of AI and machine learning workflows.

What Are Vector Databases?

Traditional databases store data in structured (SQL) or semi-structured/unstructured (NoSQL) formats, optimised for querying text, numbers, and relational data. But AI applications—especially those using embeddings—need something different.

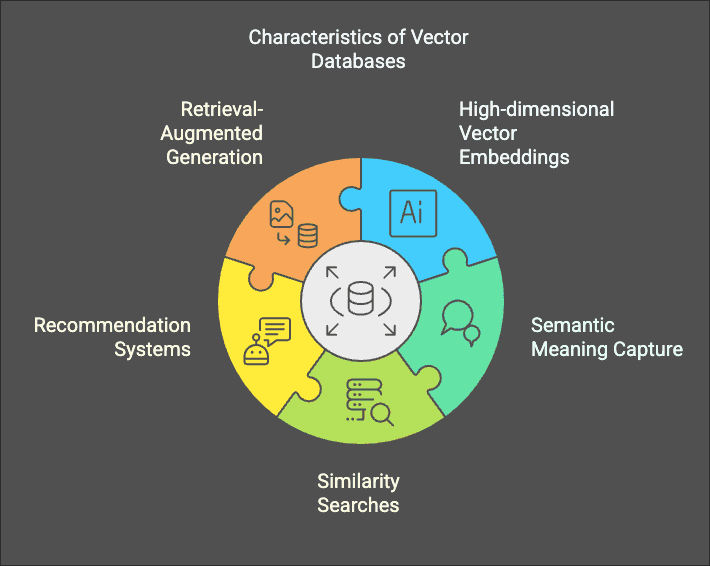

Vector databases store high-dimensional vector embeddings generated by AI models (e.g., OpenAI’s text-embedding-ada-002). These embeddings capture semantic meaning, allowing for efficient similarity searches, recommendation systems, and retrieval-augmented generation (RAG) in LLMs.

Instead of exact matches (like SQL queries), vector DBs use distance metrics (cosine similarity, Euclidean distance, etc.) to retrieve the most relevant data points based on context.
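To make those two metrics concrete, here is a minimal sketch in plain Python. The three-dimensional vectors and the `top_k` helper are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between a and b; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between a and b; 0.0 means identical."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical toy "embeddings", hand-written for this example.
documents = {
    "cats are small pets": [0.9, 0.1, 0.0],
    "dogs are loyal pets": [0.8, 0.3, 0.1],
    "stock markets fell today": [0.0, 0.2, 0.9],
}
query = [0.9, 0.1, 0.05]  # pretend this is the embedding of "pet animals"

def top_k(query_vec, docs, k=2):
    """Rank document texts by cosine similarity to the query, best first."""
    ranked = sorted(docs, key=lambda text: cosine_similarity(query_vec, docs[text]), reverse=True)
    return ranked[:k]

print(top_k(query, documents))
```

Note that cosine similarity is "higher is closer" while Euclidean distance is "lower is closer", so the sort direction flips if you swap one for the other.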

Why Not Just Use PostgreSQL or MongoDB?

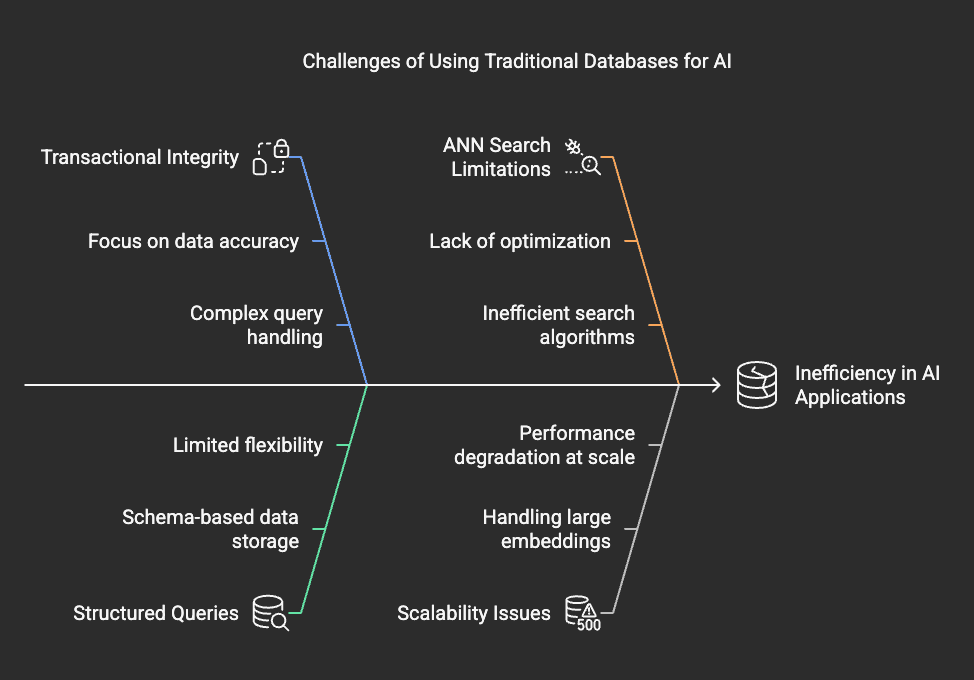

Can’t you just store vectors in a traditional database? Technically, yes. PostgreSQL’s pgvector extension and MongoDB’s Atlas Vector Search offer some capabilities, but they aren’t built from the ground up for vector-heavy workloads.

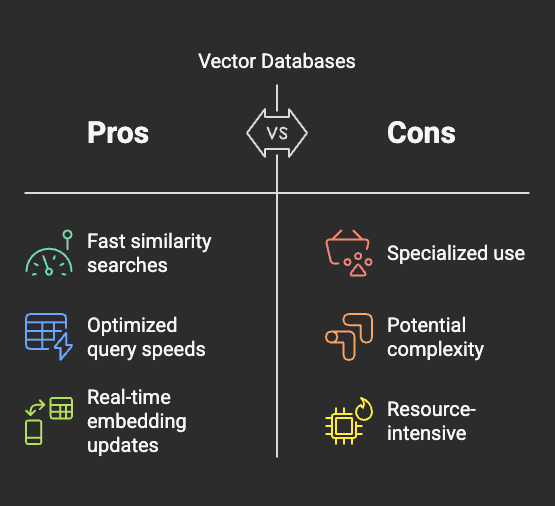

Traditional DBs:

- Optimised for transactional integrity and structured queries.

- Can store vectors but aren’t inherently designed for fast, approximate nearest neighbor (ANN) searches.

- Can struggle at scale with large numbers of embeddings (millions or more).

Vector DBs:

- Designed for fast similarity searches and large-scale AI applications.

- Support ANN index types (HNSW, IVF, etc.), often through libraries such as FAISS, to optimise query speeds.

- Efficient at handling real-time embedding updates for dynamic AI systems.
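The gap between exact and approximate search is easier to see in code. Below is a rough sketch of the IVF (inverted file) idea in plain Python: bucket vectors by their nearest centroid, then scan only a few buckets per query. `ToyIVFIndex`, `n_lists` and `n_probe` are names invented for this example, and the centroids are simply sampled at random, whereas real IVF indexes typically train them with k-means. It is not how any particular product implements ANN.

```python
import math
import random

def dist(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def exact_search(query, vectors, k):
    """Brute force: compare the query against every stored vector."""
    return sorted(range(len(vectors)), key=lambda i: dist(query, vectors[i]))[:k]

class ToyIVFIndex:
    """Toy inverted-file index: one bucket of vector ids per centroid."""

    def __init__(self, vectors, n_lists, seed=0):
        self.vectors = vectors
        # Assumption: centroids are random samples, not trained with k-means.
        self.centroids = random.Random(seed).sample(vectors, n_lists)
        self.lists = [[] for _ in range(n_lists)]
        for i, v in enumerate(vectors):
            self.lists[self._nearest_centroids(v, 1)[0]].append(i)

    def _nearest_centroids(self, v, n):
        """Ids of the n centroids closest to v."""
        order = sorted(range(len(self.centroids)), key=lambda c: dist(v, self.centroids[c]))
        return order[:n]

    def search(self, query, k, n_probe=2):
        """Scan only the n_probe buckets whose centroids are closest to the query."""
        candidates = [i for c in self._nearest_centroids(query, n_probe) for i in self.lists[c]]
        return sorted(candidates, key=lambda i: dist(query, self.vectors[i]))[:k]

rng = random.Random(42)
vectors = [[rng.random() for _ in range(8)] for _ in range(2000)]
index = ToyIVFIndex(vectors, n_lists=20)
query = [rng.random() for _ in range(8)]

exact = exact_search(query, vectors, k=5)
approx = index.search(query, k=5, n_probe=4)
scanned = sum(len(index.lists[c]) for c in index._nearest_centroids(query, 4))

print(f"exact:  {exact}")
print(f"approx: {approx} (scanned {scanned} of {len(vectors)} vectors)")
print(f"recall@5: {len(set(exact) & set(approx)) / 5:.2f}")
```

Raising `n_probe` scans more buckets and improves recall at the cost of speed; probing every bucket gives the same answer as brute force. That speed-versus-recall dial is the core trade-off of ANN search.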


Popular Vector Database Solutions

Here are some standout vector databases, with short examples of how to use a few of them, mostly via LangChain.

1. Pinecone

- Fully managed, scalable vector search.

- Real-time updates, metadata filtering, and hybrid search.

- Direct LangChain integration for seamless AI retrieval.

Example: Storing and retrieving embeddings with Pinecone + LangChain.

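    import pinecone
    from langchain.embeddings.openai import OpenAIEmbeddings
    from langchain.vectorstores import Pinecone

    # Written against pinecone-client v2 and the legacy langchain.vectorstores API.
    pinecone.init(api_key="your-api-key", environment="us-west1-gcp")

    index_name = "my-langchain-index"  # must already exist and hold embedded documents
    # from_existing_index takes an index name; the bare constructor expects an Index object.
    vectorstore = Pinecone.from_existing_index(index_name, OpenAIEmbeddings())

    query_result = vectorstore.similarity_search("What is vector search?")
    print(query_result)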

2. Weaviate

- Open-source and cloud-hosted options.

- Offers hybrid search (text + vector search combined).

- Flexible schema with optional auto-schema, which suits evolving AI applications.

Example: Inserting and searching data in Weaviate.

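    import weaviate

    # Written against the v3 Python client (weaviate.Client); the v4 client uses a different API.
    client = weaviate.Client("http://localhost:8080")

    # create_class registers a single class; schema.create expects {"classes": [...]}.
    client.schema.create_class({"class": "Article", "vectorIndexType": "hnsw"})
    client.data_object.create({"text": "Introduction to AI"}, "Article")

    # near_text needs a text vectoriser module (e.g. text2vec-*) enabled on the server.
    query_result = (
        client.query.get("Article", ["text"]).with_near_text({"concepts": ["AI"]}).do()
    )
    print(query_result)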
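Hybrid search, mentioned in the feature list above, blends a keyword score with a vector score. Here is a toy sketch of the idea in plain Python. The `alpha` weighting, the term-overlap keyword score (a stand-in for something like BM25) and the two-dimensional vectors are all assumptions made for illustration, not Weaviate's actual scoring.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def keyword_score(query, text):
    """Fraction of query terms that appear in the text (crude stand-in for BM25)."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(term in words for term in terms) / len(terms)

def hybrid_search(query, query_vec, docs, alpha=0.5, k=2):
    """alpha=1.0 is pure vector search; alpha=0.0 is pure keyword search."""
    scored = []
    for text, vec in docs:
        score = alpha * cosine_similarity(query_vec, vec) + (1 - alpha) * keyword_score(query, text)
        scored.append((score, text))
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]

# Hypothetical documents paired with hand-written toy embeddings.
docs = [
    ("Introduction to AI", [0.9, 0.1]),
    ("Machine learning basics", [0.8, 0.3]),
    ("AI in cooking recipes", [0.2, 0.9]),
]
query, query_vec = "AI", [0.85, 0.25]

for alpha in (1.0, 0.5, 0.0):
    print(alpha, hybrid_search(query, query_vec, docs, alpha=alpha))
```

With pure vector search the closest embedding wins even if the text never mentions the query term; mixing in the keyword score pulls documents containing the literal term back up the ranking.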

3. ChromaDB

- Open-source, lightweight, and Python-first.

- Great for local or embedded vector search.

- Ideal for fast prototyping and personal AI apps.

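Example: Adding and querying text in Chroma via LangChain.

    from langchain.embeddings.openai import OpenAIEmbeddings
    from langchain.vectorstores import Chroma

    # In-memory collection by default; pass persist_directory to keep it on disk.
    vectorstore = Chroma(collection_name="my_collection", embedding_function=OpenAIEmbeddings())
    # Add something to search over; a brand-new collection is empty.
    vectorstore.add_texts(["Vector search finds items by embedding similarity."])

    query_result = vectorstore.similarity_search("What is vector search?")
    print(query_result)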

4. Milvus

- High-performance, enterprise-grade vector database.

- Supports distributed indexing and scaling.

- Used in AI-heavy workloads (e.g., autonomous driving, recommendation engines).

5. FAISS (Facebook AI Similarity Search)

- A library rather than a standalone database.

- Highly optimised for fast ANN search on massive datasets.

- Often embedded in other systems; Milvus, for example, builds on FAISS among other index libraries.

How Traditional Databases Are Adapting

Big players in the database world aren’t sitting idle. Many are adding vector search features to their existing platforms to support AI-driven applications.

PostgreSQL (pgvector)

- Adds vector search to PostgreSQL.

- Works well for smaller-scale applications but is not optimised for massive datasets.

MongoDB Atlas Vector Search

- Integrates vector search into MongoDB’s document model.

- Supports fast ANN queries alongside standard NoSQL queries.

Google BigQuery ML

- Allows storing and querying embeddings.

- Integrates with Google’s AI services for large-scale analytics.

Amazon RDS (via pgvector)

- Amazon RDS for PostgreSQL supports the pgvector extension for vector search; AWS's k-Nearest Neighbours (KNN) indexes belong to Amazon OpenSearch Service.

- Best suited for enterprises already using RDS.

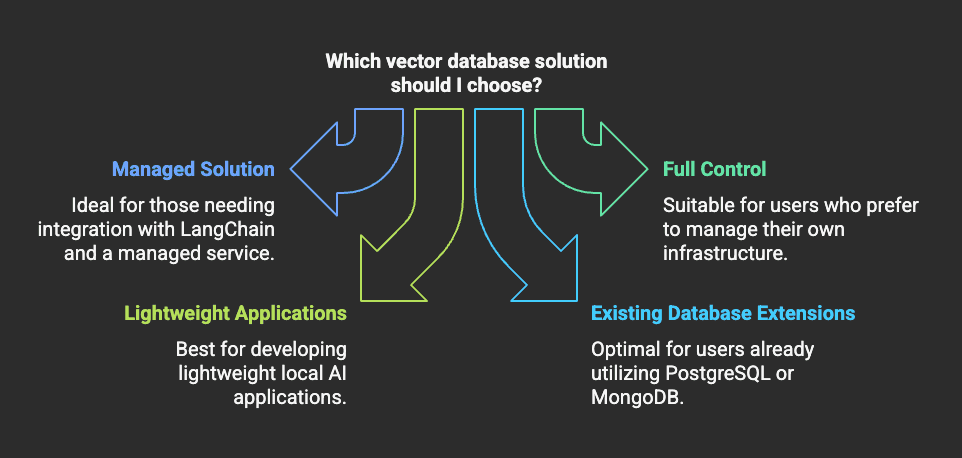

Which One Should You Use?

As AI applications scale, vector databases are becoming crucial for efficiently retrieving and using embeddings in LLM workflows. Whether you’re building a chatbot, recommendation system, or AI-powered search, choosing the right vector database is key to unlocking the full potential of your data.

Which one are you most excited to experiment with? Let’s discuss!
